Source of book image: http://www.umcs.maine.edu/~chaitin/

(p. A13) What, exactly, is information? Prior to Shannon, Mr. Gleick notes, the term seemed as hopelessly subjective as “beauty” or “truth.” But in 1948 Shannon, then working for Bell Laboratories, gave information an almost magically precise, quantitative definition: The information in a message is inversely proportional to its probability. Random “noise” is quite uniform; the more surprising a message, the more information it contains. Shannon reduced information to a basic unit called a “bit,” short for binary digit. A bit is a message that represents one of two choices: yes or no, heads or tails, one or zero.

For the full review, see:

JOHN HORGAN. “Little Bits Go a Long Way; The more surprising a message, the more information it contains.” The Wall Street Journal (Tues., March 1, 2011): A13.
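The review says information is "inversely proportional" to a message's probability, which simplifies Shannon's measure. In Shannon's formulation, the information (surprisal) of an outcome with probability p is log2(1/p) bits. It grows as p shrinks, but logarithmically rather than in strict inverse proportion: halving a probability adds one bit instead of doubling the information. The Python sketch below is a minimal illustration and does not come from Gleick's book or the review. The function names are hypothetical. It also computes Shannon entropy, which is the average surprisal over all possible messages.

```python
import math


def self_information_bits(p: float) -> float:
    """Surprisal of an outcome with probability p, in bits: log2(1/p)."""
    if not 0.0 < p <= 1.0:
        raise ValueError("probability must be in (0, 1]")
    return -math.log2(p)


def entropy_bits(probs):
    """Shannon entropy of a distribution: the probability-weighted average surprisal, in bits."""
    # Outcomes with zero probability contribute nothing and are skipped.
    return sum(p * self_information_bits(p) for p in probs if p > 0)


# A fair coin flip (heads or tails) carries exactly one bit.
print(self_information_bits(0.5))  # 1.0
# A rarer outcome (probability 1/8) is more surprising and carries more information.
print(self_information_bits(1 / 8))  # 3.0
# Average information of a fair coin versus a heavily biased one.
print(entropy_bits([0.5, 0.5]))  # 1.0
print(entropy_bits([0.9, 0.1]))  # roughly 0.47
```

A fair coin has two equally likely outcomes, and it yields exactly the one-bit "yes or no" choice described in the review. A lopsided coin yields less than one bit on average because its usual outcome is rarely surprising.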

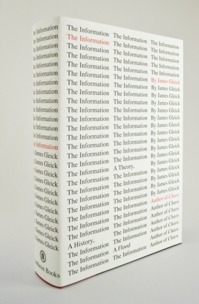

Book being reviewed:

Gleick, James. The Information: A History, a Theory, a Flood. New York: Pantheon Books, 2011.