Source of graph: online version of the WSJ article quoted and cited below.

(p. B1) SAN FRANCISCO–Since Apple Inc. launched the iPhone in June 2007, the smartphone revolution it unleashed has changed the way people work and socialize while reshaping industries from music to hotels.

It also has transformed the company in ways that co-founder Steve Jobs could hardly have foreseen.

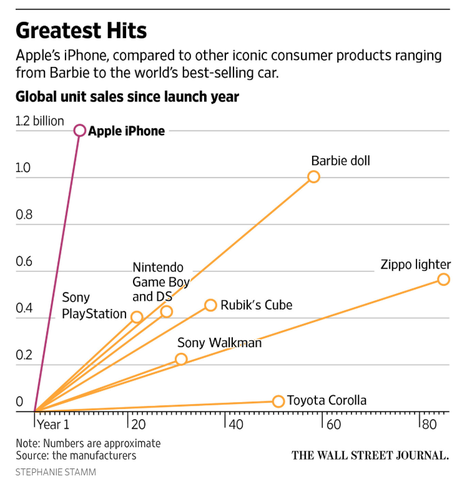

Ten years later, the iPhone is one of the best-selling products in history, with about 1.3 billion sold, generating more than $800 billion in revenue. It skyrocketed Apple into the business stratosphere, unlocking new markets, spawning an enormous services business and helping turn Apple into the world’s most valuable publicly traded company.

. . .

(p. B8) . . . , Apple didn’t open the device to application developers until 2008, when it added the App Store and began taking 30% of each app purchase.

Since then, app sales have generated roughly $100 billion in gross revenue as Apple has registered more than 16 million app developers world-wide.

. . .

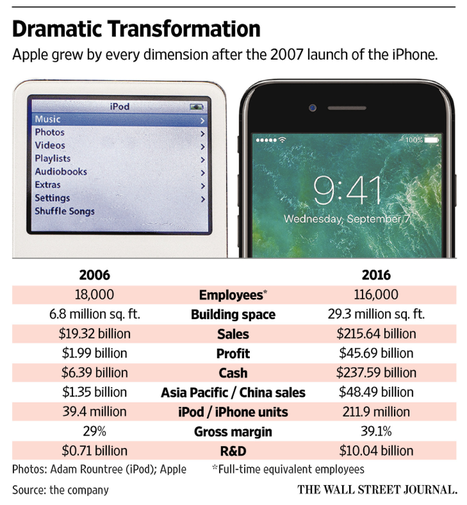

As sales surged, Apple staffed up. The company hired about 100,000 people in the 10-year span, bringing its global workforce to 116,000 from 18,000 in 2006. New workers were brought on to manage relationships with cellphone carriers, double the number of retail stores and maintain an increasingly complex supply chain.

Source of graph: online version of the WSJ article quoted and cited below.

For the full story, see:

Tripp Mickle. “How iPhone Decade Reshaped Apple.” The Wall Street Journal (Wednesday, June 21, 2017): B1 & B8.

(Note: ellipses added.)

(Note: the online version of the story has the date June 20, 2017, and has the title “Among the iPhone’s Biggest Transformations: Apple Itself.”)