Paul Ehrlich (left) and Julian Simon (right). Source of caricature: online version of the WSJ review quoted and cited below.

(p. C6) . . . in 1980 Simon made Mr. Ehrlich a bet. If Mr. Ehrlich’s predictions about overpopulation and the depletion of resources were correct, Simon said, then over the next decade the prices of commodities would rise as they became more scarce. Simon contended that, because markets spur innovation and create efficiencies, commodity prices would fall. He proposed that each party put up $1,000 to purchase a basket of five commodities. If the prices of these went down, Mr. Ehrlich would pay Simon the difference between the 1980 and 1990 prices. If the prices went up, Simon would pay. This meant that Mr. Ehrlich’s exposure was limited while Simon’s was theoretically infinite.

. . .

In October 1990, Mr. Ehrlich mailed a check for $576.07 to Simon.

. . .

Mr. Ehrlich was more than a sore loser. In 1995, he told this paper: “If Simon disappeared from the face of the Earth, that would be great for humanity.” (Simon would die in 1998.)

. . .

Mr. Sabin’s portrait of Mr. Ehrlich suggests that he is among the more pernicious figures in the last century of American public life. As Mr. Sabin shows, he pushed an authoritarian vision of America, proposing “luxury taxes” on items such as diapers and bottles and refusing to rule out the use of coercive force in order to prevent Americans from having children. In many ways, Mr. Ehrlich was an early instigator of the worst aspects of America’s culture wars. This picture is all the more damning because Mr. Sabin paints it not with malice but with sympathy. A history professor at Yale, Mr. Sabin shares Mr. Ehrlich’s devotion to environmentalism. Yet this affinity doesn’t prevent Mr. Sabin from being clear-eyed.

At heart, “The Bet” is about not just a conflict of men; it is about a conflict of disciplines, pitting ecologists against economists. Mr. Sabin cautiously posits that neither side has been completely vindicated by the events of the past 40 years. But this may be charity on his part: While not everything Simon predicted has come to pass, in the main he has been vindicated.

. . .

Mr. Ehrlich may have been defeated in the wager, but he has continued to flourish in the public realm. The great mystery left unsolved by “The Bet” is why Paul Ehrlich and his confederates have paid so small a price for their mistakes. And perhaps even been rewarded for them. In 1990, just as Mr. Ehrlich was mailing his check to Simon, the MacArthur Foundation awarded him one of its “genius” grants. And 20 years later his partner in the wager, John Holdren, was appointed by President Obama to be director of the White House Office of Science and Technology Policy.

For the full review, see:

JONATHAN V. LAST. “A Prediction that Bombed; Paul Ehrlich predicted an imminent population catastrophe; Julian Simon wagered he was wrong.” The Wall Street Journal (Sat., August 31, 2013): C6.

(Note: ellipses added.)

(Note: the online version of the review has the date August 30, 2013, and has the title “Book Review: ‘The Bet’ by Paul Sabin; Paul Ehrlich predicted an imminent population catastrophe–Julian Simon wagered he was wrong.”)
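The review's account of the payoff rule, and why it left Ehrlich's exposure capped while Simon's was open-ended, comes down to a few lines of arithmetic. Below is a minimal Python sketch of the rule only as the review states it. It does not model the actual contract terms or the real commodity prices. The function name `ehrlich_pays_simon` and every basket value other than the $1,000 stake are hypothetical. The $423.93 figure is back-calculated from the $576.07 check and is not a number reported in the review.

```python
from decimal import Decimal

# Each party's $1,000 stake buys the five-commodity basket (per the review).
STAKE = Decimal("1000.00")


def ehrlich_pays_simon(value_1980: Decimal, value_1990: Decimal) -> Decimal:
    """Hypothetical helper: amount Ehrlich owes Simon under the review's description.

    A positive result means prices fell and Ehrlich pays Simon the difference;
    a negative result means prices rose and Simon pays Ehrlich.
    """
    return value_1980 - value_1990


# Ehrlich's worst case: the basket falls to zero, so he owes at most the stake.
ehrlich_max_loss = ehrlich_pays_simon(STAKE, Decimal("0"))

# Simon's loss equals the rise in the basket's value, which has no upper bound.
# These 1990 values are hypothetical, chosen only to show the growth.
simon_losses = [
    -ehrlich_pays_simon(STAKE, value_1990)
    for value_1990 in (Decimal("2000"), Decimal("10000"), Decimal("100000"))
]

# Back-calculated (not from the review): the 1990 basket value implied by a
# $576.07 payment under this simplified rule.
implied_payment = ehrlich_pays_simon(STAKE, Decimal("423.93"))

print(ehrlich_max_loss)  # 1000.00
print(simon_losses)
print(implied_payment)  # 576.07
```

Using `Decimal` rather than `float` keeps dollar-and-cent amounts such as $576.07 exact, which avoids rounding noise when comparing payments.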

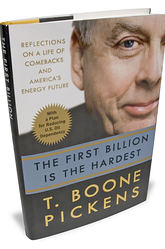

The book discussed above is:

Sabin, Paul. The Bet: Paul Ehrlich, Julian Simon, and Our Gamble over Earth’s Future. New Haven, Conn.: Yale University Press, 2013.

Source of book image: http://paulsabin.com/wp-content/uploads/2013/06/sabin_the_bet_wr.jpg

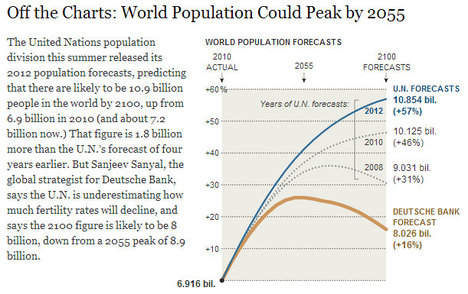

Source of graph: online version of the NYT article quoted and cited below.