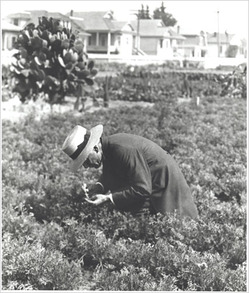

“Luther Burbank pollinating poppies in Santa Rosa, Calif.” Source of book image: online version of the NYT review quoted and cited below.

(p. C4) There is a particular type of potato at the heart of Jane S. Smith’s book about Luther Burbank, a man who described himself as an “evoluter of new plants.” Ms. Smith nicknames that potato “the lucky spud.” That turn of phrase is one of many reasons to appreciate “The Garden of Invention,” her colorful, far-reaching book about the genetic, agricultural, economic and legal issues raised by Burbank’s life and legend.

. . .

This book takes more than a passing interest in Burbank’s income, insofar as it reflected his legal ability to protect his scientific advances. In his early professional years he grappled with the doctrine that held that while a gold mine was real property and a machine to extract gold was intellectual property, the actual mineral belonged to anyone who could find it; ditto with potatoes. Throughout his career, even as he developed friendships with tycoons like Ford and Thomas Edison, Burbank lived under constant financial pressure to keep creating new plant products. “His income was entirely dependent on his latest marvel,” Ms. Smith writes.

For the full review, see:

JANET MASLIN. “Books of The Times; The Curious Man Lucky Enough to Create ‘the Lucky Spud’.” The New York Times (Mon., May 4, 2009): C4.

(Note: ellipsis added.)

(Note: the online version of the article is dated May 3, 2009.)

The book being reviewed is:

Smith, Jane S. The Garden of Invention: Luther Burbank and the Business of Breeding Plants. New York: The Penguin Press, 2009.